How To Fix Failed Social Media Previews

One day your website's social media previews work great, and the next they do not. What happened?

Social networks such as Twitter, Pinterest, Facebook, LinkedIn, and even Google Plus use a very basic "scraping" procedure to convert web pages into previews. These scrapers look first for og (Open Graph) meta tags in your content to create the previews. This makes it less tedious for the bots from these sites to process the millions of web pages shared by users daily.

So why is your website not showing a preview? More specifically, this post deals only with the case where your website or page was showing previews earlier but no longer does.

Previews can still be made without using og tags.

It's true: even though og tags have been a de facto internet standard since around 2010, when Facebook introduced the Open Graph protocol, they are still not the only way previews are made. Standard meta tags, such as the description, can act as a "failsafe" if you are not currently using Open Graph tags. And when both are missing, these bots are lazy: they make up for the lack of Open Graph or meta tags by scraping content in the order it loads. So previews should still be generated automatically, even without Open Graph tags.
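The fallback order described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular network implements its scraper; the names `PreviewParser` and `build_preview` are made up for this example, and the exact fallback rules of each network are an assumption.

```python
from html.parser import HTMLParser


class PreviewParser(HTMLParser):
    """Collect Open Graph tags, standard meta tags, and the <title> text."""

    def __init__(self):
        super().__init__()
        self.og = {}       # Open Graph values, keyed without the "og:" prefix
        self.meta = {}     # standard <meta name="..."> values
        self.title = ""    # text inside <title>
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            content = attrs.get("content")
            if content is None:
                return
            prop = attrs.get("property") or ""
            name = attrs.get("name") or ""
            if prop.startswith("og:"):
                # Keep the first occurrence of each og tag.
                self.og.setdefault(prop[3:], content)
            elif name:
                self.meta.setdefault(name.lower(), content)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def build_preview(html):
    """Prefer og tags, then fall back to <title> and the meta description."""
    parser = PreviewParser()
    parser.feed(html)
    return {
        "title": parser.og.get("title") or parser.title.strip() or None,
        "description": parser.og.get("description") or parser.meta.get("description"),
        "image": parser.og.get("image"),
    }
```

A page with no og tags at all still yields a title and description here, which is why a preview that suddenly disappears usually points to something other than your markup.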

So what is the issue then?

The problem, unfortunately, is not with the social networks in these situations. Rather, it is an issue with your server (not even your website). Luckily, there is usually a very easy solution!

You see, the scrape process works much like a human visit: the social network downloads the entirety of the page you have submitted, then scrapes through the content to generate a preview.

However, if the bot is denied access to the source code (for whatever reason), the network's debugging tool will usually report an error such as Facebook's "Curl error: 28 (OPERATION_TIMEOUTED)". Similar developer tools on other networks will likely report similar errors (usually a cURL error).
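One rough way to check this yourself is to request the page while identifying as a crawler and see what comes back. The sketch below uses only Python's standard library. The function name `fetch_status` and its `opener` parameter are made up for this example, and the two user agent strings are the ones these crawlers are commonly reported to send, so treat them as assumptions. Keep in mind that a firewall may block by IP address rather than by user agent, in which case a request from your own machine will not reproduce the problem.

```python
import socket
import urllib.error
import urllib.request

# Assumed crawler user agents; verify against each network's documentation.
CRAWLER_USER_AGENTS = {
    "facebook": "facebookexternalhit/1.1 (+http://www.facebook.com/externalhit_uatext.php)",
    "twitter": "Twitterbot/1.0",
}


def fetch_status(url, user_agent, timeout=10, opener=urllib.request.urlopen):
    """Return the HTTP status as a string, or "timeout" / "unreachable"."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with opener(request, timeout=timeout) as response:
            return str(response.status)
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 403.
        return str(err.code)
    except urllib.error.URLError as err:
        # Connection-level failure; a timeout here resembles cURL error 28.
        if isinstance(err.reason, socket.timeout):
            return "timeout"
        return "unreachable"
    except socket.timeout:
        # The connection opened but the response took too long.
        return "timeout"
    except OSError:
        return "unreachable"


if __name__ == "__main__":
    page = "https://example.com/"  # placeholder; use your own page URL
    for name, agent in CRAWLER_USER_AGENTS.items():
        print(name, fetch_status(page, agent))
```

If a normal browser user agent gets "200" while a crawler user agent gets "403" or "timeout", the server is likely treating the crawlers differently.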

How do you fix it?

Fixing the error is (hopefully) simple. The issue is probably that your firewall, whether site-side or server-side, has blocked these scraping bots as a "false positive" (a Type I error), restricting their access to your source code.

So the answer to the question at hand is simple: you must reset your firewall. If you are on a shared host, contact your hosting provider to do this for you. If you run your own server, simply reset the firewall through the given prompt.

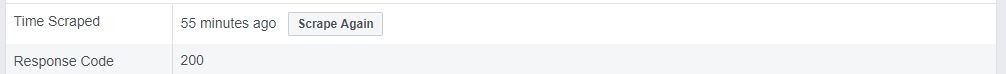

Even if you are using Yoast on WordPress, you need to reset the firewall or manually add all the scraping bots to your "exceptions" list. When you do, the developer tool will hopefully return an HTTP "200" (OK) status code.
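To make the idea of an "exceptions" list concrete, here is a minimal sketch of the kind of rule a firewall might apply. It does not reflect the configuration of any real firewall product: the token list and the function names `is_allowed_crawler` and `should_block` are assumptions made for illustration. Because user agents are easy to fake, real firewalls often also verify the crawler's IP range.

```python
# Hypothetical user agent fragments for the exceptions list; check each
# network's documentation for the strings its crawler actually sends.
ALLOWED_CRAWLER_TOKENS = ("facebookexternalhit", "twitterbot", "linkedinbot", "pinterest")


def is_allowed_crawler(user_agent):
    """Return True if the user agent contains a token from the exceptions list."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in ALLOWED_CRAWLER_TOKENS)


def should_block(user_agent, looks_suspicious):
    """Block suspicious requests unless the user agent is on the exceptions list."""
    return looks_suspicious and not is_allowed_crawler(user_agent)
```

With a rule like this, a scraper that trips the firewall's bot detection is still let through, which is what prevents the false positive.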
